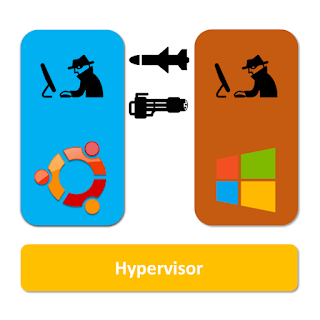

Embedded virtualization is NOT server virtualization

When IBM first invented virtualization, it was intended to solve the problem of single-user operating systems (OSes). By creating virtual machines (VMs), multiple users could log in to their dedicated VMs and perform tasks simultaneously. Today, though, single-user OSes are history and multi-user OSes are the norm. Many people can log in to the same machine without the help of virtualization. Luckily, virtualization technology has found new roles as well. First, it allows a user to switch dynamically between different OSes. Second, it provides an environment for multiple users to share the same physical machine while each has a potentially different software setup, meaning different OSes or incompatible system configurations. Going one step further, it abstracts the notion of computation resources from physical machines, allowing fixed computation resources (e.g. VMs) to be dynamically allocated and moved across data centers. This is what we ...