The foreground/background scheduling model for real-time and cloud systems

In real-time systems, the very basic task model assumes every real-time task (process) is either periodic or can be modeled as periodic one (e.g., sporadic servers). A periodic task is generally assigned three properties: period (T), worst case execution time (WCET, or C) and deadline (D). In every period T, the task needs to complete some job before its deadline D. In the worst possible case with all sorts of execution interference (e.g., interrupts, synchronization, cache evictions, memory bus contention), it takes C amount of time to finish the job. In no period would the job execution time exceed C. In normal execution though, the job time is way smaller than C. C <= D, since if the relative deadline D is smaller than C, the task will always fail in the worst case. Notice D can be greater than T, although most scheduling algorithms and analysis methods assume D <= T or just D = T. You can definitely write a web server that handles one request in every period and has D > T; but I don't see any real-world real-time application use case that would have D > T (please let me know if you know any).

Based on this model, a lot of scheduling algorithms have been proposed that try to guarantee C amount of time for a task in its every period. The common goal is to have an analyzable algorithm so that people can perform schedulability analysis. Given a set of real-time tasks and the hardware platform, a schedulability analysis is to decide whether these tasks can always run on the platform without any deadline misses (called schedulable). We also want our algorithms to be optimal or near-optimal to maximize hardware resource utilization (mostly focusing on CPUs at the moment, but can include cache, memory, etc.). The goals are easier to achieve on single-core platforms; on multicore platforms, cores can be contending for shared resources, leading to extra stalls in program execution. This means we have to come up with even more pessimistic estimate of C for every task running on multicore platforms. More resources will be reserved, thus less tasks can enter the system. During normal execution, a lot of those resources are idle and wasted. One solution is to hard partition all resources that are previously shared. Basically, this is like taking multiple single-core processors and putting them on the same chip (distributed system on a chip). Whether this is going to be the future, I don't know. But in this article I want to talk about another solution where hardware resources are still shared amongst cores. In this solution, we would like to make use of the idle resources caused by pessimistic estimation of Cs and avoid the worst case execution as much as possible.

To begin with, let me introduce the notion of VCPU (not in the context of virtualization). A VCPU is a resource container that is used to control the CPU allocation to tasks. When we put a real-time task into a VCPU, we want to assign its real-time properties to the VCPU. To simplify the discussion, I will assume D = T, so only two properties (C, T) are given to the VCPU. Then a real-time scheduler keeps track of VCPUs' statistics (e.g., budget) and schedules them instead of tasks. Budget tells you how much reserved CPU time is left for the task to run.

Now let's get into the foreground/background scheduling model. Each runnable VCPU with available budget at the current time operates in foreground mode. When a VCPU depletes its budget it enters background mode, where it may only be scheduled (if the task inside is still runnable) if there are no other runnable VCPUs in foreground mode on the same core. If a VCPU blocks, it gets removed from the core and its mode is ignored. A core is said to be in background mode when all VCPUs on it are in background mode, otherwise the core is in foreground mode. When in foreground mode, the core's local scheduler will use the normal real-time scheduling algorithm (e.g., rate-monotonic, earliest deadline first). The meaning of core's background mode is, at that point, all the real-time requirements have been satisfied and the core is free to run anything it likes. So we can develop smart and novel scheduling algorithms just for background mode (background scheduling), without being constrained by those real-time properties.

Ideas for background scheduling vary a lot. VCPUs can fair-share the CPU time when the core is in background mode (called background CPU time). Or the scheduler can take advantage of the warm cache and schedules VCPUs that already have states in the cache. We can also consider the contention inside shared cache or memory bus and only schedule VCPUs that generate less accesses to them. Through a global coordination amongst all cores, we will be able to stop some cores' background execution should heavy cache/memory contention happen. For people who worry about scalability issues when they see "global coordination", I believe a distributed way to do it is possible as well. So, the execution in background mode meets our first goal, "make use of the idle resources"; the adoption of some contention-aware background scheduling algorithms meets our second goal, "avoid the worst case execution".

Now let's take a look at some potential applications of the foreground/background scheduling model. In real-time systems, the tasks that are strictly periodic would not benefit from the model as they never run in background mode. However, the execution times for tasks' periodic jobs are usually way smaller than the corresponding Cs, leaving cores in background mode for a large percentage of time. Non-periodic tasks (e.g., infotainment systems in cars/airplanes) can greatly boost their performance with this amount of background CPU time. Non-critical periodic tasks can benefit from it as well. For example, a car can use its camera to detect traffic signs and give warnings to its driver. The detection algorithm runs periodically to process new frames. This task is less critical than tasks like brake/steering control and will only run if critical tasks have been completed. The nice thing about this model is that, background CPU time is consumed in a controlled (contention-aware) way. As a result, critical tasks running in foreground mode will only get limited interference from code running in background mode.

Additionally, the imprecise computation model can be combined with the foreground/background scheduling model. The former basically says computation should be done in a way that the more time it executes the more precise (or better quality) the result is. For example, numerical integration algorithms try to approximate the result by refining it iteratively. Let's see another more interesting example. Say a car uses LIDAR and camera for object avoidance. It's mandatory to process the LIDAR data in time to avoid collision. However, if we analyze the scene from the camera data, we can tell the types of objects and predict their moving directions. This may help the car to plan a better route for object avoidance.

Actually implementing the imprecise computation model is a bit tricky. We need two special types of task: foreground (FG) task and background (BG) task. Normal task can run in foreground and background mode; FG task runs only in foreground, while BG task runs only in background. We should create at least one FG task and one BG task, the former executes the mandatory part of the periodic job and the latter is responsible for the optional (improvement) part. They should all be placed inside a single VCPU. Once the FG task completes its job, it wakes up the BG task, passes the original result and waits for the latter. When the BG task is done, it returns the refined result and the control to the FG task, which submits the final result to the system. At some fixed time point before FG task's deadline, if the BG task has not finished its work, then the FG task will be woken up. It finds empty result from the BG task, returns its original result and kills (or resets) the BG task.

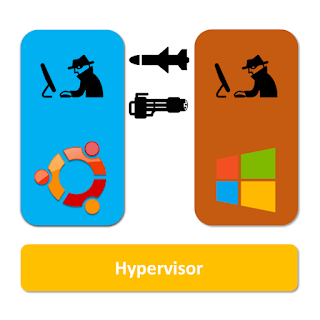

All right, so far I've been talking about the application of this foreground/background model in real-time systems. As the article title suggests, it should be able to be applied to cloud systems as well. In cloud systems, although we don't have real-time requirements, we do have the demand for quality-of-service (QoS). If I pay $100 a month for a VM (say dual-core Intel Xeon is specified), I wouldn't be happy when my VM achieves a performance as good as a single-core Intel Pentium 4. Besides, some cloud vendors provide VMs with low latency support, which definitely need guaranteed services in time. In my opinion, the VM hardware specification can be translated into the real-time properties for VCPUs. The core number is the number of VCPUs; core's performance is converted into C and T (low latency VM has shorter period). So, the foreground execution satisfies the QoS requirements from users. When the physical machines have extra capacity left, they will improve VMs' performance through background execution.

If you are interested in some details of this model, please read this paper: MARACAS: A Real-Time Multicore VCPU Scheduling Framework.

Comments

Post a Comment