Embedded virtualization is NOT server virtualization

Back when IBM first invented virtualization, it was intended to solve the problem of single-user operating systems (OSes). By creating virtual machines (VMs), multiple users could log in to their dedicated VMs and perform tasks simultaneously. Today, though, single-user OSes are history and multi-user OSes are the norm. Many people can log in to the same machine without the help of virtualization. Fortunately, virtualization technology has found new roles as well. First, it allows a user to dynamically switch between different OSes. Second, it provides an environment in which multiple users share the same physical machine while each has a potentially different software setup, meaning different OSes or incompatible system configurations. Going one step further, it abstracts computation resources away from physical machines, allowing units of computation (e.g., VMs) to be dynamically allocated and moved across data centers. This is what we call server virtualization.

Among all the concerns for server virtualization, there are two I want to point out. The first is resource consolidation, which aims to increase each server's utilization for a cost-efficient cloud business. Typically, we want to pack each server with as many VMs as possible, without noticeable performance degradation, of course. That way, when some VMs are inactive, CPUs, DRAM, and I/O devices are still being used by other VMs instead of sitting idle. The second concern is security. Here I don't mean traditional network attacks. Think about multiple users sharing the same physical machine, each running their own jobs. It would be unacceptable if user A's job were terminated whenever user B crashed his VM. Similarly, user A must not be able to steal user B's private data by exploiting the shared machine. More precisely, this is about security between users on the same machine.

These two design goals are very important in the server domain. However, as the title suggests, this article is about embedded virtualization. So my question is: in the embedded domain, do we still care as much about resource consolidation and security?

To answer this question, we first need to understand why people want virtualization in embedded systems. As far as I can see, the major benefit is combining real-time services with a feature-rich development environment. For real-time services alone, we can adopt a typical real-time OS (RTOS), which usually has fewer features. For rich features such as all sorts of tools and libraries, we can rely on general-purpose OSes (GPOSes, e.g., Windows or Linux distributions). Consider a use case like driving a car (autonomous or not): we definitely need strict timing for crucial driving controls; at the same time, for the infotainment system, we want good support for developing user-friendly graphical interfaces, multimedia functionality, etc. It would be fine to have one chip for running timing-critical tasks and another chip for hosting the infotainment system. However, the current trend seems to be consolidating multiple chips into a single powerful multicore chip for cost savings, better performance, and smaller form factors [1]. In this case, virtualization is much needed for hosting both an RTOS and a GPOS on the same chip.

Now back to our question. First, do we still care as much about consolidation? My answer is: we care, but not as much as in the server domain. The very move to multicore indicates a desire for resource consolidation. However, given the state of the art in real-time systems research, I believe hard real-time execution on multicore platforms remains an extremely difficult and unsolved problem. Hardware resources shared among cores are a major source of performance interference for real-time services. A thread that seemingly runs in isolation from threads on other cores may experience timing unpredictability due to unforeseen evictions, misses, and reloads in caches shared with those cores. Moreover, cache-line fills require accesses to a shared memory bus (or system bus). This can force one or more co-running threads to stall while a bus transaction is performed for another thread. So my point is: before we talk about fully loading every core, we should consider how to add a certain degree of parallelism without breaking the system or making it unreliable. In other words, lower CPU utilization is acceptable (some server virtualization experts would laugh at me😏).
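To make this trade-off concrete, here is a toy placement sketch in Python. Everything in it is hypothetical (the `VM` class, the load numbers, and both placement functions); it is not taken from any real hypervisor. It contrasts server-style packing, where any VM may share any core, with an embedded-style policy that gives the real-time VM a core of its own and accepts the idle capacity left on that core. Note that core pinning alone does not remove interference through shared caches and the memory bus described above; it only removes direct competition for CPU time.

```python
from dataclasses import dataclass

CORE_CAPACITY = 100  # capacity of one core, in percent


@dataclass
class VM:
    name: str
    load: int       # hypothetical CPU demand, in percent of one core
    critical: bool  # True for a real-time, safety-critical VM


def _first_fit(vms, cores, candidates, placement):
    """Place each VM (largest first) on the first candidate core with room."""
    for vm in sorted(vms, key=lambda v: v.load, reverse=True):
        for i in candidates:
            if cores[i] + vm.load <= CORE_CAPACITY:
                cores[i] += vm.load
                placement[vm.name] = i
                break
        else:
            raise ValueError(f"no room for {vm.name}")


def place_server_style(vms, num_cores):
    """Pack VMs densely: any VM may share a core with any other VM."""
    cores = [0] * num_cores
    placement = {}
    _first_fit(vms, cores, range(num_cores), placement)
    return placement, cores


def place_embedded_style(vms, num_cores):
    """Give each critical VM a core of its own (its spare capacity stays idle),
    then pack the non-critical VMs onto the remaining cores."""
    critical = [vm for vm in vms if vm.critical]
    if len(critical) > num_cores:
        raise ValueError("not enough cores for the critical VMs")
    cores = [0] * num_cores
    placement = {}
    for i, vm in enumerate(critical):
        cores[i] = vm.load
        placement[vm.name] = i
    others = [vm for vm in vms if not vm.critical]
    _first_fit(others, cores, range(len(critical), num_cores), placement)
    return placement, cores


def busy_core_utilization(cores):
    """Average load (percent) over the cores that host at least one VM."""
    busy = [c for c in cores if c > 0]
    return sum(busy) // len(busy)


vms = [
    VM("rtos", 30, critical=True),
    VM("infotainment", 60, critical=False),
    VM("navigation", 40, critical=False),
    VM("media", 30, critical=False),
]
srv_place, srv_cores = place_server_style(vms, num_cores=4)
emb_place, emb_cores = place_embedded_style(vms, num_cores=4)
print(srv_cores, busy_core_utilization(srv_cores))  # [100, 60, 0, 0] 80
print(emb_cores, busy_core_utilization(emb_cores))  # [30, 100, 30, 0] 53
```

In the server-style result the RTOS shares core 1 with the media VM; in the embedded-style result it runs alone on core 0, so the same work occupies three cores instead of two at a lower average utilization. That lower utilization is exactly the price the paragraph above argues is worth paying.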

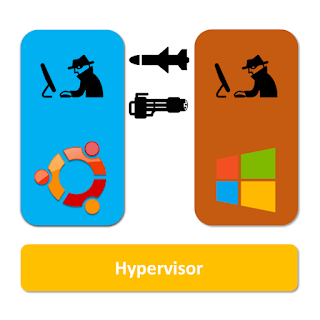

There is a second part to my question: do we care about security on the same machine? I would say yes, although the security models differ between the two domains. On the server side, complete strangers may be sharing the same physical machine. Since every user can potentially attack the others, the hypervisor (or virtual machine monitor) treats everyone at the same security level, and protection and isolation are enforced bidirectionally among VMs. Embedded systems, though, are rarely (if ever) designed to be shared by arbitrary users. Most likely, a single user owns all the VMs, and virtualization is used to achieve functional separation within the system. For example, in the car case, one VM handles driving controls while another hosts the infotainment system. We are talking about mixed-criticality systems here, i.e., systems composed of a mixture of safety-critical and non-critical components. With virtualization, each VM hosts a different component and thus has a different criticality/security level in the system. The enforcement of protection and isolation is unidirectional: VMs of higher criticality must not be compromised by, or leak information to, VMs of lower criticality. The reason for this model is easy to see. Back to our favorite car example: the infotainment component may include a large unverified code base that is prone to bug-related crashes. It may also have various communication channels to the outside world (e.g., LTE, Wi-Fi, and Bluetooth), exposing it to countless network attacks.
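The difference between the two security models can be written as an access-control predicate. The Python sketch below is purely illustrative: `Guest`, the integer criticality scale, and both predicate functions are hypothetical and do not correspond to any real hypervisor API. "Access" loosely stands for any channel through which one VM could corrupt or read another (memory, devices, control operations). Whether peers at the same criticality level should be isolated from each other is not covered by the text; the sketch assumes they are.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Guest:
    name: str
    criticality: int  # hypothetical scale: a higher number means more critical


def server_may_access(subject: Guest, target: Guest) -> bool:
    """Server model: all tenants are equally untrusted, so isolation is
    bidirectional and a VM may only touch its own resources."""
    return subject == target


def embedded_may_access(subject: Guest, target: Guest) -> bool:
    """Mixed-criticality model: isolation is unidirectional. A less critical
    VM can never reach a more critical one, while a more critical VM is not
    blocked from reaching a less critical one. Peers at the same level stay
    isolated (an assumption, not stated in the text)."""
    return subject == target or subject.criticality > target.criticality


driving = Guest("driving-control", criticality=2)
infotainment = Guest("infotainment", criticality=1)

print(embedded_may_access(infotainment, driving))  # False: low cannot reach high
print(embedded_may_access(driving, infotainment))  # True: high may reach low
print(server_may_access(driving, infotainment))    # False: isolated both ways
```

The only change between the two models is the predicate: the server version is symmetric, while the embedded version protects just the more critical side.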

All right, that's my answer to the question. As we move from server virtualization to embedded virtualization, some of the basic problem assumptions change. Consequently, we should not think like "server virtualization experts" when designing next-generation embedded systems. Embedded virtualization is not, and should not be, server virtualization.

If you are interested in some ideas on embedded virtualization design, please read this paper: vLibOS: Babysitting OS Evolution with a Virtualized Library OS.