Why you should consider using a memory latency metric instead of memory bandwidth

On multicore platforms with shared memory, the performance of the memory bus is critical to the overall performance of the system. Heavy contention on the memory bus leads to unpredictable task completion times in real-time systems and to throughput fluctuations in server systems. Currently, people monitor memory bus contention by looking at the whole system's memory bandwidth (e.g., bytes/sec). When the observed bandwidth exceeds a certain threshold (which is hardware dependent), the system is considered to be under memory bus contention. While the memory bandwidth metric is widely used in both academia and industry, I would argue that it is not accurate enough to identify contention. Well, if you profile your application with something like Valgrind, you certainly get accurate information on almost everything. But here we are talking about online performance monitoring, where you don't want to dramatically slow down your application in a production environment. So most likely, you would want to use the processor's performance monitoring unit (PMU) for this purpose.

Let me start with a brief introduction to DRAM structure (partly based on [1]). A modern DRAM system consists of a set of ranks, each of which has multiple DRAM chips. Each DRAM chip has a narrow data interface (e.g., 8 bits), so the chips in the same rank are combined to widen the data interface (e.g., 8 bits x 8 chips = 64-bit data bus). A DRAM chip consists of multiple DRAM banks, and memory requests to different banks can be serviced in parallel. Each DRAM bank is a two-dimensional array of rows and columns of memory locations. To access a column in the array, the entire row containing that column must first be loaded into a row-buffer. Each bank has one row-buffer that holds at most one row at a time. Once a row is loaded, subsequent accesses to the same row are buffer hits, which access the row-buffer with shorter latency. An access to a different row (a buffer miss) forces the row-buffer to be reloaded and takes more time to complete.
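To make the row-buffer behavior concrete, here is a toy model of a few banks, each with a single row-buffer. It is a minimal sketch under simplifying assumptions: requests are given directly as hypothetical (bank, row) pairs instead of being decoded from physical addresses (that mapping is controller-specific), timing is ignored, and an access to a bank with no open row is simply counted as a miss.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple


@dataclass
class Bank:
    """One DRAM bank with a single row-buffer holding at most one open row."""
    open_row: Optional[int] = None
    hits: int = 0
    misses: int = 0

    def access(self, row: int) -> bool:
        """Access `row`; return True on a row-buffer hit, False on a miss."""
        if self.open_row == row:
            self.hits += 1
            return True
        # Miss (simplification: an empty row-buffer also counts as a miss):
        # the requested row has to be loaded into the row-buffer first.
        self.open_row = row
        self.misses += 1
        return False


def replay(accesses: List[Tuple[int, int]], num_banks: int) -> List[Bank]:
    """Replay hypothetical (bank, row) accesses; return per-bank statistics."""
    banks = [Bank() for _ in range(num_banks)]
    for bank_id, row in accesses:
        banks[bank_id].access(row)
    return banks
```

Eight accesses to the same row of one bank give 1 miss and 7 hits, while eight accesses alternating between two rows of that bank miss every time, even though both streams move exactly the same amount of data.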

So you can see two issues above that affect the accuracy of the memory bandwidth metric. The first is bank-level parallelism. While separate banks can be accessed in parallel, requests to the same bank are serialized. Therefore, a bandwidth number alone doesn't tell you whether the memory bus is heavily contended: that depends on which bank each request goes to. The second issue is the row-buffer design: within the same bank, sequential accesses (buffer hits) are serviced faster than random accesses (buffer misses). For the same number of memory requests, the memory bus may be congested if they cause continuous buffer misses, while traffic may be smooth otherwise. Actually, there is a third issue: read/write turnaround time. Switching the bus between memory reads and writes causes extra stalls. However, current memory controllers seem to use request-reordering policies that alleviate this problem.
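Both effects can be illustrated with a tiny timing model. This is a sketch, not a DRAM simulator: the hit and miss service times below are hypothetical round numbers rather than datasheet values, all requests are issued at time 0, each bank serves its own requests one after another, and different banks work in parallel. A request's latency is the time at which its bank finishes it.

```python
from typing import Dict, List, Optional, Tuple

# Hypothetical service times in controller cycles (illustrative only).
HIT_CYCLES = 15
MISS_CYCLES = 45


def simulate(accesses: List[Tuple[int, int]]) -> Tuple[int, float]:
    """Serve (bank, row) requests issued at time 0.

    Returns (makespan, average latency). Requests to the same bank are
    serialized; requests to different banks proceed in parallel.
    """
    if not accesses:
        raise ValueError("need at least one request")
    busy_until: Dict[int, int] = {}
    open_row: Dict[int, Optional[int]] = {}
    latencies: List[int] = []
    for bank, row in accesses:
        # Row-buffer hit if this bank already has the row open.
        cost = HIT_CYCLES if open_row.get(bank) == row else MISS_CYCLES
        open_row[bank] = row
        busy_until[bank] = busy_until.get(bank, 0) + cost
        latencies.append(busy_until[bank])
    return max(busy_until.values()), sum(latencies) / len(latencies)
```

With these assumed timings, eight requests spread over eight banks finish in 45 cycles (average latency 45), eight row hits in one bank take 150 cycles (average 97.5), and eight alternating-row misses in one bank take 360 cycles (average 202.5). A bandwidth counter sampled over a window longer than all three sees the same eight requests in each case.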

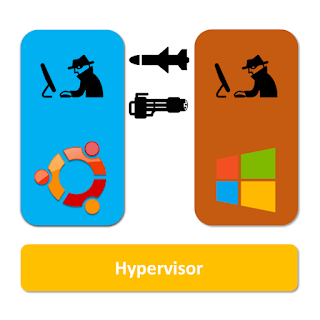

Beyond these hardware-related issues, there is also a software issue. Consider the case where two tasks, task1 and task2, run periodically with period T. The above figure shows the tasks running concurrently on separate cores until time t, when they finish their current execution. Both tasks are then blocked until T. Because of the idle interval [t, T] (which can be longer than t), a bandwidth measurement averaged over the whole period is diluted, so the system may not appear to be under heavy bus contention. However, while the tasks are executing, they may generate a large number of memory requests, congesting the bus in [0, t]. In other words, we think the bus traffic is smooth, but every memory access is experiencing terrible delay. We call this phenomenon the Sync Effect; it occurs when two or more cores have overlapping idle times.
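The dilution is simple arithmetic: all the traffic is squeezed into [0, t], but a bandwidth monitor divides it by the whole window, so the reported value is the burst bandwidth scaled by t/T. A minimal sketch, with a hypothetical helper name:

```python
from typing import Tuple


def observed_vs_burst_bandwidth(bytes_moved: float, t: float,
                                T: float) -> Tuple[float, float]:
    """Return (bandwidth during [0, t], bandwidth averaged over [0, T]).

    Both tasks run in [0, t] and are blocked in [t, T], so every byte
    is transferred during the busy interval.
    """
    if not 0 < t <= T:
        raise ValueError("need 0 < t <= T")
    burst = bytes_moved / t
    averaged = bytes_moved / T  # equals burst * t / T
    return burst, averaged
```

For example, with hypothetical numbers of 60 MB moved in t = 2 ms inside a T = 10 ms period, the bus sees 30 GB/s during the burst, but the averaged figure is only 6 GB/s and can easily stay under a bandwidth threshold. An average latency per request does not suffer from this, because the idle interval issues no requests and therefore contributes nothing to the average.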

To address these problems, I propose monitoring memory bus contention through the average memory request latency, or latency metric for short. Unlike bandwidth, latency is directly related to application performance, as it tells you the response time of memory requests. Getting latency data is a bit tricky, though. There are two ways to do it. The first is to set up a periodic thread. In every period, it reads data from a fixed location in uncacheable memory and times the whole process. Given the total time and the amount of data read, the average memory request latency is just a simple division. The shorter the period, the more accurate the latency, but the more overhead you put on the memory bus and the CPU. Luckily, if you are using a recent Intel processor, you can get latency data directly from hardware (the PMU). Intel Sandy Bridge and more recent processors provide two uncore performance monitoring events:

- UNC_ARB_TRK_REQUEST.ALL;

- UNC_ARB_TRK_OCCUPANCY.ALL.
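Both estimates reduce to a division. The occupancy event accumulates the number of outstanding requests every cycle, so by Little's law its increase over a sampling interval divided by the increase in the request count gives the average latency per request, in the cycles the counter ticks at. The sketch below assumes you already have raw counter readings (obtained however your platform exposes them, e.g. through the OS perf interface or MSRs); the helper names are hypothetical, and for the probe estimate I assume each uncacheable load of `bytes_per_request` bytes is one memory request.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical helper names; only the two event names come from the text.


@dataclass(frozen=True)
class UncoreSample:
    """Raw readings of the two uncore counters taken at one instant."""
    occupancy: int  # UNC_ARB_TRK_OCCUPANCY.ALL
    requests: int   # UNC_ARB_TRK_REQUEST.ALL


def pmu_latency_cycles(prev: UncoreSample,
                       curr: UncoreSample) -> Optional[float]:
    """Average latency per request between two samples, in counter cycles.

    Occupancy grows each cycle by the number of outstanding requests, so its
    increase is the total request-cycles in flight; dividing by the number of
    new requests gives cycles per request (Little's law). Assumes neither
    counter wrapped around between the samples. Returns None if no request
    was issued in the interval.
    """
    d_occupancy = curr.occupancy - prev.occupancy
    d_requests = curr.requests - prev.requests
    if d_requests <= 0:
        return None
    return d_occupancy / d_requests


def probe_latency_ns(total_time_ns: float, bytes_read: int,
                     bytes_per_request: int) -> float:
    """Periodic-thread estimate: average time per uncacheable read request.

    Assumption: every uncacheable load of `bytes_per_request` bytes is
    serviced as one memory request.
    """
    requests = bytes_read // bytes_per_request
    if requests == 0:
        raise ValueError("probe read less than one request's worth of data")
    return total_time_ns / requests
```

For instance, if occupancy grows by 60,000 while 500 new requests arrive, the average latency over that interval is 120 cycles.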

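All right, you are all set at this point. Because the occupancy event accumulates the number of outstanding requests every cycle, dividing its increase over a sampling interval by the increase in the request count gives the average latency per request. With suitable latency thresholds, you will be able to monitor memory bus contention easily and accurately, and possibly do some clever resource management. If you are interested in further details, please read this paper: MARACAS: A Real-Time Multicore VCPU Scheduling Framework.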
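Putting the pieces together, a monitor samples both counters periodically and flags an interval as contended when its average request latency exceeds a threshold. This is a minimal sketch: the function name is hypothetical, and since a good threshold is hardware dependent, the value used in the example is made up for illustration.

```python
from typing import List, Sequence, Tuple


def contended_intervals(samples: Sequence[Tuple[int, int]],
                        threshold_cycles: float) -> List[bool]:
    """Flag sampling intervals whose average request latency exceeds a threshold.

    `samples` holds consecutive (occupancy, requests) counter readings;
    interval i spans samples[i] to samples[i + 1]. An interval with no
    requests is reported as not contended.
    """
    flags: List[bool] = []
    for (occ0, req0), (occ1, req1) in zip(samples, samples[1:]):
        d_req = req1 - req0
        latency = (occ1 - occ0) / d_req if d_req > 0 else 0.0
        flags.append(latency > threshold_cycles)
    return flags
```

With readings (0, 0), (5000, 100), (25000, 200), (25000, 200) and a hypothetical 100-cycle threshold, the three intervals average 50 cycles, 200 cycles, and no requests, so only the second one is flagged.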
